Algorithmic Governance and the Crisis of Accountability

How Decision-Making Was Automated While Responsibility Disappeared

Abstract

Algorithmic governance is increasingly presented as a solution to complexity, inefficiency, and human bias in public decision-making. Governments and institutions rely on algorithmic systems to allocate resources, assess risk, predict behavior, and guide policy choices. This paper argues that while algorithmic governance promises rationality and objectivity, it simultaneously produces a profound crisis of accountability. As decisions are delegated to models, responsibility becomes fragmented, obscured, or entirely displaced. By examining the structural logic of algorithmic governance, the paper shows that accountability erodes not through technical failure but through institutional design choices that prioritize efficiency, insulation, and depoliticization over democratic responsibility.

1. Introduction: Governance Without Governors

Governance traditionally implies identifiable authority: institutions, officials, and procedures that can be questioned, challenged, and held responsible. Algorithmic governance disrupts this foundation.

Across public and private sectors, algorithms now:

- assess eligibility for welfare and social services

- rank risk in policing and migration

- guide regulatory enforcement

- inform budgetary and policy priorities

Yet when outcomes are contested, responsibility is often deferred to “the system.” This paper asks:

Who governs when governance is automated?

2. What Is Algorithmic Governance?

Algorithmic governance refers to the use of automated systems to:

- structure decision processes

- prioritize policy options

- regulate access to rights and services

- manage populations through prediction and scoring

It does not eliminate human actors, but repositions them as overseers of systems rather than authors of decisions.

Governance shifts:

- from deliberation to optimization

- from judgment to prediction

- from responsibility to procedural compliance

3. The Appeal of Algorithms in Governance

The adoption of algorithmic governance is driven by powerful incentives:

3.1 Complexity Management

Algorithms promise to manage social complexity through quantification and abstraction.

3.2 Political Insulation

Delegating decisions to models allows institutions to deflect blame and controversy.

3.3 Administrative Efficiency

Automation reduces cost, speeds up processing, and standardizes outcomes.

These benefits come at a cost rarely acknowledged: the erosion of accountability.

4. Accountability: A Structural Definition

Accountability is not merely transparency. It requires:

- identifiable decision-makers

- explainable reasoning

- contestability

- consequences for error or harm

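Algorithmic governance undermines each of these pillars: decision-makers become hard to identify, reasoning hard to explain, outcomes hard to contest, and consequences hard to assign.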
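To make the four requirements concrete, the following minimal sketch treats them as fields that any record of an automated decision would need to fill in. The `DecisionRecord` class, its field names, and the `accountability_gaps` function are hypothetical illustrations, not part of any existing system or standard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DecisionRecord:
    """Hypothetical record of one automated decision (all field names are assumptions)."""
    decision_maker: Optional[str] = None  # named person or office answerable for the outcome
    reasoning: Optional[str] = None       # explanation given to the affected person
    appeal_channel: Optional[str] = None  # where and how the decision can be contested
    remedy: Optional[str] = None          # consequence or redress if the decision was wrong


def accountability_gaps(record: DecisionRecord) -> list[str]:
    """Return the accountability requirements that the record leaves unmet."""
    requirements = {
        "identifiable decision-maker": record.decision_maker,
        "explainable reasoning": record.reasoning,
        "contestability": record.appeal_channel,
        "consequences for error or harm": record.remedy,
    }
    return [name for name, value in requirements.items() if not value]


# A decision attributed only to "the system", justified by a score alone.
record = DecisionRecord(reasoning="risk score above threshold")
print(accountability_gaps(record))
```

The check only tests whether each field is present. It cannot judge whether the stated reasoning is actually understandable or whether the appeal channel works in practice, which is why accountability remains an institutional question rather than a purely technical one.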

5. How Accountability Collapses

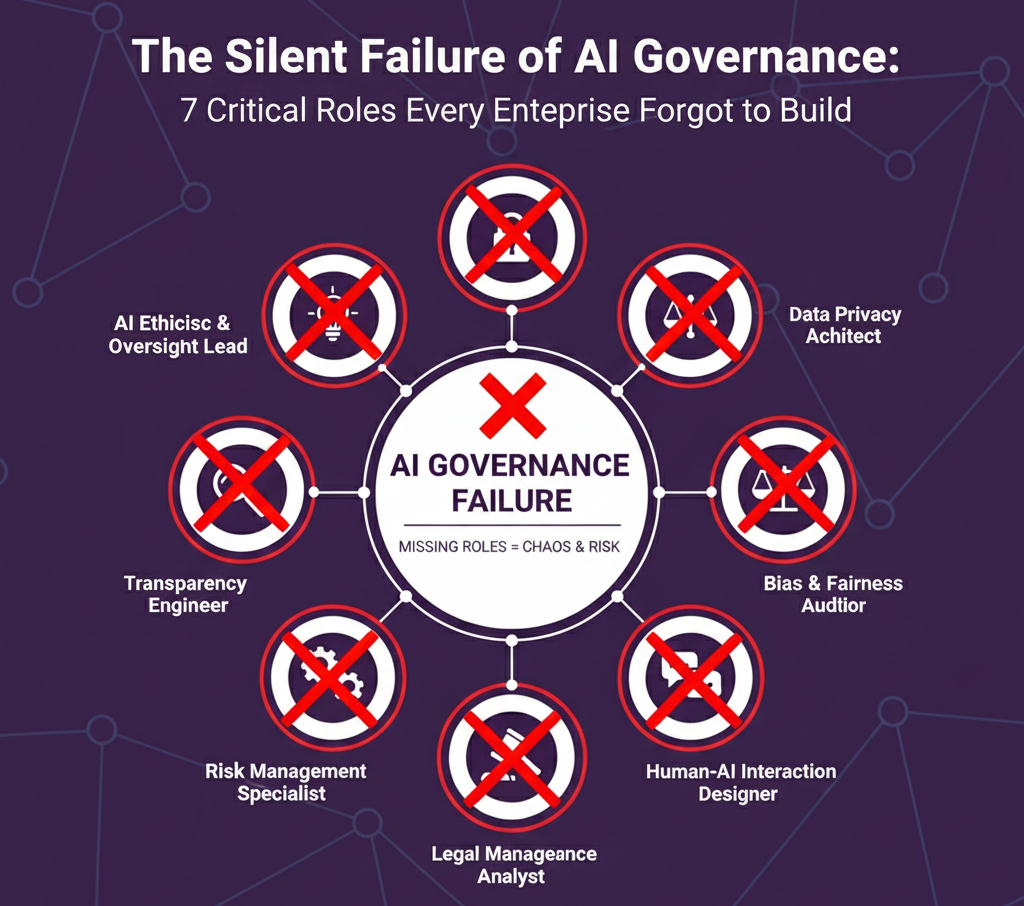

5.1 Fragmentation of Responsibility

Algorithmic systems distribute responsibility across:

- data providers

- model designers

- system deployers

- frontline operators

No single actor holds full ownership of decisions, so each can point to another when an outcome is challenged.

5.2 Opacity and Technical Shielding

Complex models are:

- difficult to explain

- inaccessible to non-specialists

- protected by claims of proprietary secrecy

Opacity thus becomes a feature of governance rather than a flaw: a decision that cannot be inspected is harder to challenge.

5.3 Procedural Legitimacy Without Substantive Justice

Decisions are legitimized by adherence to procedure (“the system was followed”), regardless of outcome quality or fairness.

6. Case Domains of Algorithmic Governance

6.1 Welfare and Social Policy

Automated eligibility systems determine:

- benefit access

- fraud risk

- service prioritization

Errors disproportionately affect vulnerable populations, yet accountability mechanisms remain weak.

6.2 Policing and Security

Predictive systems guide:

- patrol deployment

- surveillance priorities

- threat assessment

These systems can embed historical bias, for example when they learn from past enforcement records, while presenting outputs as neutral risk assessments.
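One way historical bias can persist is sketched in the toy model below. It is a deliberately simplified, deterministic illustration with made-up numbers, not a description of any deployed system: two districts have the same underlying incident rate, patrols are allocated in proportion to past recorded incidents, and incidents are recorded only where patrols are present. The function name and parameters are assumptions chosen for this example.

```python
def simulate_records(initial_records, true_rates, patrols_per_round, rounds):
    """Deterministic toy model: incidents are recorded only where patrols are sent."""
    records = list(initial_records)
    for _ in range(rounds):
        total = sum(records)
        # Patrols are allocated in proportion to past recorded incidents.
        shares = [r / total for r in records]
        for i, share in enumerate(shares):
            # Expected new records depend on patrol presence, not only on the true rate.
            records[i] += true_rates[i] * patrols_per_round * share
    return records


# Two districts with identical underlying rates but a skewed historical record.
final = simulate_records(
    initial_records=[60.0, 40.0],
    true_rates=[0.5, 0.5],
    patrols_per_round=100,
    rounds=20,
)
share_a = final[0] / sum(final)
print(f"District A share of recorded incidents: {share_a:.2f}")
```

Under these assumptions the initial 60/40 split in the records never corrects itself, even though the districts are identical in reality. The data appear to confirm the original allocation, which is how a historical skew can be read as a neutral risk assessment.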

6.3 Migration and Border Control

Algorithmic tools assess:

- visa applications

- asylum risk

- border enforcement priorities

Decisions with life-altering consequences are reduced to scores and classifications.

6.4 Public Health and Crisis Management

During crises, algorithmic models inform:

- resource allocation

- risk thresholds

- emergency measures

Model uncertainty is often concealed behind authoritative projections.
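By way of contrast, a projection can be reported together with its spread rather than as a single authoritative figure. The sketch below assumes a hypothetical set of model runs (the numbers are invented for illustration) and summarizes them as a median with a 5th to 95th percentile range, using Python's standard `statistics` module.

```python
import statistics


def summarize_projection(ensemble_runs):
    """Summarize model runs as a median with a 5th-95th percentile range."""
    # With n=20, quantiles() returns 19 cut points: the first is the 5th
    # percentile and the last is the 95th. The "inclusive" method interpolates
    # within the observed runs instead of extrapolating beyond them.
    cuts = statistics.quantiles(ensemble_runs, n=20, method="inclusive")
    return {
        "median": statistics.median(ensemble_runs),
        "low": cuts[0],
        "high": cuts[-1],
    }


# Hypothetical projected peak demand from ten model runs (made-up numbers).
runs = [410, 455, 480, 500, 520, 545, 560, 610, 690, 820]
summary = summarize_projection(runs)
print(
    f"Projected peak demand: {summary['median']:.0f} "
    f"(plausible range {summary['low']:.0f} to {summary['high']:.0f})"
)
```

Publishing the range alongside the central estimate does not resolve the uncertainty, but it keeps the decision about how much risk to accept visible as a decision rather than hiding it inside the model output.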

7. Depoliticization Through Automation

Algorithmic governance reframes political decisions as technical necessities.

Contested questions—Who deserves support? Who poses risk? Who gets priority?—are transformed into:

“What does the model say?”

This shift removes democratic deliberation from governance while preserving institutional control.

8. Accountability as a Design Choice

The accountability crisis is not inevitable. It is the result of choices:

- to prioritize efficiency over explanation

- to value insulation over responsibility

- to treat governance as optimization rather than judgment

Algorithmic governance could be designed differently—but only if accountability is treated as a core requirement, not an afterthought.

9. Toward Accountable Algorithmic Governance

Reclaiming accountability requires structural interventions:

- clear attribution of decision ownership

- mandatory human responsibility for algorithmic outcomes

- enforceable rights to explanation and contestation

- institutional consequences for algorithmic harm

- democratic oversight of system deployment

Without these, algorithmic governance risks becoming governance without accountability.

10. Conclusion

Algorithmic governance does not eliminate power; it reorganizes it. Decision-making becomes faster, more scalable, and less visible, while responsibility becomes diffuse and elusive.

The crisis of accountability is not a technical malfunction—it is a political condition produced by the automation of governance.

Recognizing this condition is the first step toward resisting a future in which decisions are made everywhere, yet no one is responsible anywhere.

Humainalabs situates itself at this critical juncture, insisting that governance—algorithmic or otherwise—must remain answerable to the people it governs.

Keywords

Algorithmic Governance, Accountability, AI Policy, Public Decision-Making, Power, Automation